Automata Theory MOOC

I’ve just finished the final exam for Stanford’s Automata Theory online course, run by Jeff Ullman. I originally chose to follow this course because 1). I wanted to learn (and in some cases re-learn) about finite automata and context-free grammars, and 2). it’s Jeff Ullman (duh). The finite automata and context-free grammar stuff was fun and useful and pretty easy, but the most interesting part of the course ended up being the material about decidability, computability and tractability (P vs. NP and all that jazz). I was re-reading Hoftstadter’s Gödel, Escher, Bach at the same time as doing the course, and so questions of decidability and computability were bouncing around in my mind.

By the Barrel

I wrote this piece of silliness (a sort of Tolkien/Lovecraft mash-up) a while ago as a writing exercise. It’s been languishing on my computer ever since. I’ve been getting behind on Haskell FFT stuff, so I thought I’d release it into the wild...

Haskell FFT 8: Benchmarking Experiments

We’re going to do a number of different things to optimise the code developed in the previous article, but before we do that, we need to do some baseline performance measurements, so that we know that our “improvements” really are improving things. The performance results we see will also help to guide us a little in the optimisations that we need to do.

Benchmarking is a fundamental activity when working with numerical code, but it can be quite difficult to do it correctly in a lazy language like Haskell. It’s very easy to get into a situation where a benchmarking loop (perhaps to run a computation 1000 times to collect accurate timing information) performs the computation on the first time round the loop, then reuses the computed result for all the other 999 times round.

We can bypass these worries quite effectively using Bryan O’Sullivan’s Criterion library. As well as providing a clean framework for ensuring that pure calculations really do get rerun for timing purposes, Criterion gives us a nice API for setting up benchmarks, running them and collecting results.

North Korea: Nothing To Laugh At

I’ve read a couple of books about North Korea recently, neither of which were much fun. The first was Nothing to Envy: Real Lives in North Korea by Barbara Demick. This was interesting partially for the “arm’s length” approach that Demick had to take–the only North Koreans she could find to talk to were escapees from the Kim regime living in the US or South Korea. Despite those limitations, Demick does a good job (as far as I can tell) of portraying the day-to-day challenges of life in North Korea. The most striking thing? The pettiness of the regime: the megalomania and the monuments to Kim père et fils is one thing, but the Songbun system of what sociologists call “ascribed status” is almost biblical in its horror. So your grandfather found himself on the wrong side of the border at the end of the Korean War? Tough luck, sister, you’re on the Dear Leader’s shit list. Three generations dumped into the “hostile class”. Fuck yeah. I’d be hostile too. No opportunities for employment, constant suspicion from the authorities and the stooges they recruit from the population (who can blame anyone for what they do in that sort of environment? any one of us would probably end up being a stooge too if we could–choice is a luxury in North Korea), and the constant fear of incarceration for one misspoken word. And all the while, the Kims live in luxury and mismanage the country into famine and destitution. Demick’s description of the kochebi, “wandering swallows”, homeless orphans whose parents had died in the famine of the 1990s, is depressing as hell.

Haskell FFT 7: Where We’ve Got To, Where We’re Going

Let’s summarise what we’ve done so far, as well as pointing out some properties of our general mixed-radix FFT algorithm that will be important going forward. We’ll also think a little bit about just what we are going to do next. Here’s what we’ve done:

Written down the basic expression for the DFT and implemented it directly in Haskell. POST

Built some “toy algebra” tools to help us understand the Fourier matrix decomposition at the heart of the Cooley-Tukey FFT algorithm. POST

Implemented a basic powers-of-two FFT. POST

Extended the “toy algebra” code to the case of general input vector lengths. POST

Implemented a full mixed-radix FFT in Haskell for general input vector lengths. POST

Ten Years

Tryfan, North Wales, 2006

Tryfan, North Wales, 2006

In our house, there are four dates that are important. We don’t do birthdays so much, and religious festivals aren’t really of interest. However, the solstices are important–they’re the “corners of the year” and if you spend as much time outside as we do, in all weathers, the length of the day makes a big difference to your mood.

Then there’s September 4th, which is the reason we spend so much time outside and why the solstices are important! That was the day a couple of years ago when we adopted our dog Winnie, changing our lives beyond all recognition, almost all for the better.

And then there’s today. Ten years ago today, Rita and I went on our first date, to a small South American restaurant in Bristol, where we were both living at the time. Ten years later, asking Rita out to dinner seems like one of the best decisions I ever made (and I’m glad she said yes!). Since then, I think that the longest period we’ve had apart was about six weeks, when I went on a long trip to Canada (before we lived there). Now, spending just a day apart feels like too much.

What do I love most about Rita? She’s compassionate, honest, funny. She cares about people and the world. Her compassion rubs off on me and makes me a better person. We laugh together so much, sometimes it hurts. After ten years, we know each others’ moods pretty well. We’ve learnt to deal with each other’s little foibles, and we support and care for each other as much as we ever can.

When I see her smile, my world just lights up. I feel as though I spend most of my days awash in an ocean of love and warmth.

So, as tough as it may be for her to deal with, I think she may very well be stuck with me now...

Coders at Work

by Peter Seibel

I like the premise of this book–find some of the best programmers out there and talk to them about their craft (or art, or science, if you prefer). First thing to say though, is that the list of interviewees tells you something about the state of the computing industry today: out of fifteen people interviewed, only one is a woman. Of the other fourteen, all of them are white men. You can argue that most of these people are pretty senior and are mostly more a product of the culture of the 1960s, 1970s and 1980s than now, but still. I’ll come back to this in a minute, because it turns out that the interview with the sole woman, Fran Allen, was one of the most interesting.

Haskell FFT 6: Implementing the Mixed-Radix FFT

[The code from this article is available as a Gist]

In this article, we’re going to implement the full “sorted prime factorisation mixed-radix decimation-in-time Cooley-Tukey FFT”. That’s a bit of a mouthful, and you might want to review some of the algebra in the last article to remind yourself of some of the details of how it works.

The approach we’re going to take to exploring the implementation of this mixed-radix FFT is to start with a couple of the smaller and simpler parts (prime factor decomposition, digit reversal ordering), then to look at the top-level driver function, and finally to look at the generalised Danielson-Lanczos step, which is the most complicated part of things. This code has an unavoidably large number of moving parts (which is probably why this algorithm is rarely covered in detail in textbooks!), so we’ll finish up by writing some QuickCheck properties to make sure that everything worksAn admission: it took me quite a while to get everything working correctly here. The powers-of-two FFT took about an hour to write an initial version and perhaps another hour to tidy things up. The mixed-radix FFT took several hours of off-line thinking time (mostly walking my dog), a couple of hours to put together an initial (non-working) version, then several more hours of debugging time..

The Teeny-Tiny Happy Hakyll TikZ Pixie

For my blog, I use Jasper Van der Jeugt’s Hakyll system. When I was first looking for a blogging platform, I rejected all the usual choices (Wordpress, etc.), mostly because I’m a borderline obsessive control freak and they didn’t give enough configurability. Plus, they weren’t Haskell. I started writing my own blogging system, but then I found Hakyll. “Great,” I thought, “that’s perfect, except I want something almost completely different!”

Haskell FFT 5: Transforming Vectors of Arbitrary Lengths

[The code from this article is available as a Gist]

So far, we’ve been dealing only with input vectors whose lengths are powers of two. This means that we can use the Danielson-Lanczos result repeatedly to divide our input data into smaller and smaller vectors, eventually reaching vectors of length one, for which the discrete Fourier transform is just the identity. Then, as long as we take account of the bit reversal ordering due to the repeated even/odd splitting we’ve done, we can use the Danielson-Lanczos lemma or its matrix equivalent to efficiently reassemble the entries in our data vector to form the DFT (at this point, you may want to review the second article to remind yourself how this matrix decomposition works).

This is all standard stuff that you’ll find in a lot of textbooks. Starting in this article though, we’re going to veer “off piste” and start doing something a bit more interesting. It used to be that, if you wanted to do FFTs of vectors whose lengths weren’t powers of two, the professional advice was “don’t do that” (that’s what my copy of Numerical Recipes says!). However, if you’re prepared to brave a little bit of algebra, extending the Cooley-Tukey approach to vectors of arbitrary lengths is not conceptually difficult. It is a bit fiddly to get everything just right, so in this article, we’ll start exploring the problem with some more “toy algebra”.

Haskell FFT 4: A Simple Application

[The code from this article is available as a Gist.]

Before we go on to look at how to deal with arbitrary input vector lengths (i.e. not just powers of two), let’s try a simple application of the powers-of-two FFT from the last article.

We’re going to do some simple frquency-domain filtering of audio data. We’ll start with reading WAV files. We can use the Haskell WAVE package to do this–it provides functions to read and write WAV files and has data structures for representing the WAV file header information and samples.

We’ll use a sort of sliding window FFT to process the samples from each channel in the WAV file. Here’s how this works:

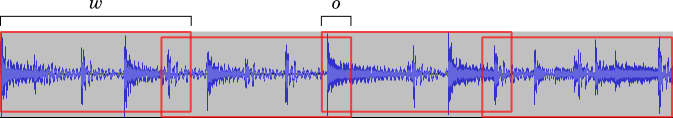

We split the audio samples up into fixed-length “windows” (each $w$ samples long) with adjacent windows overlapping by $o$ samples. We select $w$ to be a power of 2 so that we can use our power-of-two FFT algorithm from the last section. We calculate the FFT of each window, and apply a filter to the spectral components (just by multiplying each spectral component by a frequency-dependent number). Then we do an inverse FFT, cut off the overlap regions and reassemble the modified windows to give a filtered signal.

I’m not going to talk much about the details of audio filtering here. Suffice it to say that what we’re doing isn’t all that clever and is mostly just for a little demonstration–we’ll definitely be able to change the sound of our audio, but this isn’t a particularly good approach to denoising speech. There are far better approaches to that, and I may talk about them at some point in the future.

Radian UI Features

I’ve been doing some work on interactive features for the Radian plotting library recently, mostly just floating UI elements to allow you to switch between linear and log axes, to change the number of bins in histograms, and so on. There’s an article on the BayesHive blog showing some examples of how this works.

Now I just have to write some documentation to go with the spiffy new features...

Haskell FFT 3: Cooley-Tukey for Powers of Two

[The code from this article is available as a Gist. I’m going to go on putting the code for these articles up as individual Gists as long as we’re doing experimentation–eventually, there will be a proper repo and a Hackage package for the full “bells and whistles” code.]

The decomposition of the Fourier matrix described in the previous article allows us to represent the DFT of a vector of length $N$ by two DFTs of length $N/2$. If $N$ is a power of two, we can repeat this decomposition until we reach DFTs of length 1, which are just an identity transform. We can then use the decomposition

$$F_{2N} = \begin{pmatrix} I_N & D_N \\ I_N & -D_N \end{pmatrix} \begin{pmatrix} F_N & \\ & F_N \end{pmatrix} P_{2N} \qquad (1)$$

to build the final result up from these decompositions.

At each step in this approach, we treat the even and odd indexed elements of our input vectors separately. If we think of the indexes as binary numbers, at the first step we decompose based on the lowest order bit in the index, at the next step we decompose based on the next lowest bit and so on.

The Quest For Artificial Intelligence

by Nils Nilsson

Nils Nilsson has been involved in artificial intelligence research more or less since the inception of the field in the late 1950s. (He’s probably best known for his work on Shakey the robot, A* search, and his idea of teleo-reactive planning.) This makes him ideally placed to write a history of artificial intelligence.

Haskell FFT 2: Matrix factorisation

[The code from this article is available as a Gist.]

In the first article in this series, we considered a set of $N$ data values from a function $h(t)$ sampled at a regular interval $\Delta$:

$$h_n = h(n \Delta) \qquad n = 0, 1, 2, \dots, N-1.$$ and defined the discrete Fourier transform of this set of data values as

$$H_n = \sum_{k=0}^{N-1} h_k e^{2\pi i k n/N} \qquad n = 0, 1, 2, \dots, N-1. \qquad (1)$$

The original Cooley and Tukey fast Fourier transform algorithm is based on the observation that, for even $N$, the DFT calculation can be broken down into two subsidiary DFTs, one for elements of the input vector with even indexes and one for elements with odd indexes:

$$\begin{aligned} H_n &= \sum_{k=0}^{N-1} h_k e^{2\pi i k n/N} \\ &= \sum_{k=0}^{N/2-1} h_{2k} e^{2\pi i (2k) n/N} + \sum_{k=0}^{N/2-1} h_{2k+1} e^{2\pi i (2k+1) n/N} \\ &= \sum_{k=0}^{N/2-1} h_{2k} e^{2\pi i k n/(N/2)} + \omega^n \sum_{k=0}^{N/2-1} h_{2k+1} e^{2\pi i k n/(N/2)} \\ &= H^e_n + \omega_N^n H^o_n, \end{aligned}$$

where we write $\omega_N = e^{2\pi i / N}$, and where $H^e_n$ is the $n$th component of the DFT of the evenly indexed elements of $h$ and $H^o_n$ is the $n$th component of the DFT of the oddly indexed elements of $h$. This decomposition is sometimes called the Danielson-Lanczos lemma.

In this article, we’re going to look in detail at this decomposition to understand just how it works and how it will help us to calculate the DFT in an efficient way. In the next article, we’ll see how to put a series of these even/odd decomposition steps together to form a full DFT.